This article is part of the Understanding Money Mechanics series, by Robert P. Murphy. The series will be published as a book in late 2020.

To fully understand our current global monetary system, in which all of the major powers issue unbacked fiat money, it is helpful to learn how today’s system emerged from its earlier form. Before fiat money, all major currencies were tied (often with interruptions due to war or financial crises) to one or both of the precious metals, gold and silver. This international system of commodity-based money reached its zenith under the so-called classical gold standard, which characterized the global economy from the 1870s through the start of World War I in 1914.

Under a genuine gold standard, a nation’s monetary unit is defined as a specific weight of gold. There is “free” coinage of gold, meaning that anyone can present gold bullion to the government to be minted into gold coins of the appropriate denomination in unlimited quantities (perhaps with a small charge for the service). Going the other way, to the extent that there are paper notes or token coins issued by the government as official money, these can be presented by anyone for immediate redemption in full-bodied gold coins. Finally, under a genuine gold standard, there are no restrictions on the flow of gold into and out of the country, so that foreigners too can avail themselves of the options described above.

To this day, arguments over the gold standard are not merely technical disagreements concerning economic analysis. Rather, the gold standard often serves as a proxy for “sound money,” which was a central element in the classical liberal tradition of limiting government’s ability to wreak havoc on society. As Ludwig von Mises explains:

It is impossible to grasp the meaning of the idea of sound money if one does not realize that it was devised as an instrument for the protection of civil liberties against despotic inroads on the part of governments. Ideologically it belongs in the same class with political constitutions and bills of rights. The demand for constitutional guarantees and for bills of rights was a reaction against arbitrary rule and the nonobservance of old customs by kings. The postulate of sound money was first brought up as a response to the princely practice of debasing the coinage. It was later carefully elaborated and perfected in the age which—through the experience of the American continental currency, the paper money of the French Revolution and the British restriction period—had learned what a government can do to a nation’s currency system. (bold added)

It should go without saying that in the present chapter, we are not offering a comprehensive history of the gold standard, even from the limited perspective of the United States. Rather, we merely attempt to explain its basic mechanics, and to highlight some of the major events in the world’s evolution from a global monetary system based on market-produced commodity money to our current framework, which rests on government-issued fiat monies.

The Precious Metals: The Market’s Money

In the previous chapter, we explained that money wasn’t planned or invented by a wise king, but rather emerged spontaneously from the actions of individuals. We also explained why historically people settled on the precious metals, gold and silver, as the preeminent examples of commodity money.

In more recent times (specifically since 1971, as we will document later in this chapter), most people on Earth have used unbacked fiat money, issued by various governments (or central banks acting on their behalf), which is not redeemable in any commodity.

Yet between these two extremes there was a long period when governments issued sovereign currencies that were defined as weights of gold and/or silver. In the US, coins stamped with certain numbers of dollars would actually contain the appropriate gold or silver content, such as a $20 Double Eagle gold coin containing 0.9675 troy ounces of gold. Furthermore, after the US government began the practice of issuing paper notes of various dollar denominations, anyone could present the paper for redemption in the corresponding full-bodied coins. Even during periods when specie redemption was suspended—as often happened during wars—the public generally assumed (correctly) that the government paper currencies would eventually be linked back to the precious metals, and this expectation helped anchor the value of the paper money.

Explainer: “Fixed” Exchange Rate vs. Government Price-Fixing

When multiple countries participate in a gold standard, it is typical to say their governments have adopted a regime of “fixed exchange rates,” where the various sovereign currencies trade against each other in constant ratios.

In contrast, economists such as Milton Friedman have written persuasive essays3 making the case for flexible or “floating” exchange rates, in which governments don’t intervene in currency markets but rather let supply and demand determine how many pesos trade for a dollar. Part of Friedman’s argument is that when governments do try to “fix” the value of their currency—usually propping it above the market-clearing level—it leads to a glut of the domestic (overvalued) currency and shortages of (undervalued) foreign exchange. So if economists are opposed to price-fixing when it comes to the minimum wage and rent control, shouldn’t they also oppose it in the currency markets?

Although Friedman himself obviously understood the nuances, this type of reasoning might mislead the average reader. Under a gold standard, governments don’t use coercion to “fix” exchange rates between different currencies. So the policy here is nothing at all like governments setting minimum wages or maximum apartment rents, where the “fixing” is accomplished through fines and/or prison time levied on individuals who transact outside of the officially approved range of prices.

Instead, under a gold standard, each government makes the standing offer to the world to redeem its own paper currency in a specified weight of gold. This offer is completely voluntary. No one in the community has to exchange currency notes for gold; people merely have the option of doing so.

However, given that two different governments pledge to redeem their respective currencies in definite weights of gold, it is a simple calculation to determine the “fixed” exchange rate between those two currencies. For example, in the year 1913—near the end of the era of the classical gold standard—the British government stood ready to redeem its currency at the rate of £4.25 per ounce of gold, while the US government would redeem its currency at the rate of (approximately) $20.67 per ounce of gold. These respective policies implied—using simple arithmetic—that the exchange rate between the currencies was “fixed” at about $4.86 per British pound. Yet this ratio wasn’t maintained by coercion, and the actual market exchange rate of dollars for pounds did in fact deviate from the anchor point of $4.86. It’s just that if the market exchange rate moved too far in either direction, it would eventually become profitable for currency speculators to ship gold from one country to the other, in a series of trades that would push the market exchange rate back toward the “fixed” anchor point.
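The "simple arithmetic" behind the implied exchange rate can be sketched in a few lines of Python. This is only an illustration using the two redemption rates quoted above; the function and variable names are ours, not part of any historical source.

```python
# Mint prices quoted in the text for 1913, in currency units per troy ounce of gold.
USD_PER_OUNCE = 20.67  # approximate US redemption rate
GBP_PER_OUNCE = 4.25   # British redemption rate as given in the text


def implied_parity(home_per_ounce: float, foreign_per_ounce: float) -> float:
    """Return units of home currency per unit of foreign currency.

    Both currencies are claims on the same metal, so the ratio of the two
    mint prices is the rate at which neither claim is the cheaper route to gold.
    """
    return home_per_ounce / foreign_per_ounce


parity = implied_parity(USD_PER_OUNCE, GBP_PER_OUNCE)
print(f"Implied parity: ${parity:.2f} per pound")
```

Run as written, this reports a parity of about $4.86 per pound, matching the anchor point discussed in the text.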

To see how this works, suppose that the US government (back in 1913) began printing new dollars very rapidly. Other things equal, this would reduce the value of the dollar against the British pound. Suppose that when all of the new dollars flooded into the economy, rather than the usual $4.86 to “buy” a British pound, the price had been bid up to $10.

At this price, there would be an enormous arbitrage opportunity: specifically, a speculator could start out with $2,067 and present it to the US government, which would be obligated to hand over 100 ounces of gold. Then the speculator could ship the 100 ounces of gold across the ocean to London, where the gold could be exchanged with the British authorities for £425. Finally, the speculator could take his £425 to the foreign exchange market, where he could trade them for $4,250 (because in this example we supposed that the dollar price of a British pound had been bid up to $10 in the forex market). Thus, in this simple tale, our speculator started out with $2,067 and transformed it into $4,250, less the fees involved in shipping.

Besides reaping a large profit, the speculator’s actions in our tale would also have the following effects: (a) they would drain gold out of US government vaults, providing the American authorities with a motivation to stop with their reckless dollar printing, (b) they would add gold reserves to British government vaults, providing the British authorities with the ability to safely print more British pounds, and (c) they would tend to push the dollar price of British pounds down, moving it from $10 back toward the anchor price of $4.86.
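The speculator's round trip can be traced step by step in a short Python sketch. It uses only the figures from the tale above; the function name and the optional `shipping_cost_usd` parameter are hypothetical, and shipping is set to zero because the text gives no figure for it.

```python
def round_trip_dollars(start_usd, usd_per_ounce, gbp_per_ounce,
                       market_usd_per_gbp, shipping_cost_usd=0.0):
    """Dollars left after redeeming in the US, shipping gold, and selling pounds."""
    ounces = start_usd / usd_per_ounce       # redeem dollars for gold in the US
    pounds = ounces * gbp_per_ounce          # exchange the gold for pounds in London
    gross_usd = pounds * market_usd_per_gbp  # sell the pounds in the forex market
    return gross_usd - shipping_cost_usd     # shipping cost is an assumed input


# The exaggerated scenario from the text: the pound has been bid up to $10.
ending = round_trip_dollars(2067, 20.67, 4.25, 10.0)
print(f"Start: $2,067.00  End: ${ending:,.2f}")

# At the mint parity itself the same trip merely returns the starting sum.
at_parity = round_trip_dollars(2067, 20.67, 4.25, 20.67 / 4.25)
print(f"At parity the trip returns ${at_parity:,.2f}")
```

The second call shows why the anchor is self-enforcing: the trade pays only when the market rate sits above parity by more than the cost of moving the gold.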

To be sure, I’ve exaggerated the numbers in this simple example to keep the arithmetic easier. In reality, as the dollar weakened against the British pound, it would hit the “gold export point” well before reaching $10. Through the arbitrage process we explained above, whenever the actual market exchange rate strayed too far above the $4.86 anchor, automatic forces would set in causing gold to flow out of US vaults and push the market exchange rate back toward the “fixed” rate. (This process would happen in reverse if the exchange rate fell too far below the $4.86 anchor and crossed the “gold import point”: gold would flow out of the United Kingdom and into American vaults, and set in motion processes that would push the exchange rate back up toward the anchor point.)
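The gold export and import points can be sketched the same way. The text gives no shipping costs, so the 0.5 percent figure below is a purely hypothetical assumption chosen for illustration, as are the function names; the sketch simply treats the cost of moving gold as a fraction of its value.

```python
PARITY = 20.67 / 4.25              # dollars per pound implied by the two mint prices
HYPOTHETICAL_COST_FRACTION = 0.005  # assumed shipping and insurance cost, not from the text


def gold_points(parity, cost_fraction):
    """Return (import_point, export_point) in dollars per pound."""
    # Shipping gold from the US to Britain pays only if the pound fetches
    # enough dollars to cover the cost of the voyage.
    export_point = parity / (1 - cost_fraction)
    # Shipping gold from Britain to the US pays only if the pound is cheap enough.
    import_point = parity * (1 - cost_fraction)
    return import_point, export_point


def gold_flow(market_rate, parity, cost_fraction):
    """Describe which way arbitrage would move gold at a given market rate."""
    import_point, export_point = gold_points(parity, cost_fraction)
    if market_rate > export_point:
        return "gold flows out of the US"
    if market_rate < import_point:
        return "gold flows into the US"
    return "no gold shipment is profitable"


for rate in (4.80, 4.86, 10.00):
    print(f"${rate:.2f} per pound: {gold_flow(rate, PARITY, HYPOTHETICAL_COST_FRACTION)}")
```

Between the two points the market rate is free to move without triggering any shipment of gold, which is the sense in which the "fixed" rate was an anchor rather than a decree.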

We have spent considerable time on this mechanism to be sure the reader understands exactly what it means to say there were “fixed exchange rates” under the classical gold standard. To repeat, these were not based on government coercion, and did not constitute “price-fixing” by the government. No shortages of foreign exchange occur under a genuine gold standard, because actual market exchange rates remain free to move and always clear the market.

It is difficult for us, growing up in a world of fiat money, to appreciate the fact, but historically people viewed gold (and silver) as the actual money, with sovereign currencies being defined as weights of the precious metals. As Rothbard explains:

We might say that the “exchange rates” between the various countries [under the classical gold standard] were thereby fixed. But these were not so much exchange rates as they were various units of weight of gold, fixed ineluctably as soon as the respective definitions of weight were established. To say that the governments “arbitrarily fixed” the exchange rates of the various currencies is to say also that governments “arbitrarily” define 1 pound weight as equal to 16 ounces or 1 foot as equal to 12 inches, or “arbitrarily” define the dollar as composed of 10 dimes and 100 cents. Like all weights and measures, such definitions do not have to be imposed by government. They could, at least in theory, have been set by groups of scientists or by custom and commonly accepted by the general public.

In concluding this section, we can agree with Milton Friedman that in a world of governments issuing their respective fiat monies, coercive government ceilings or floors in the foreign exchange market, enforced through fines and/or prison sentences, will lead to the familiar problems characteristic of all price controls. As Rothbard conceded, “the only thing worse than fluctuating exchange rates is fixed exchange rates based on fiat money and international coordination.”

However, the advocates of a genuine international gold standard stress that its underlying regime of (implied) fixed exchange rates would be even better, because it would effectively allow individuals around the world to benefit from the use of a common money. That is to say, for all the reasons that domestic commerce within the United States is fostered through the common use of dollars, commerce and especially long-term investment between countries will be enhanced when no one has to worry about fluctuating exchange rates on top of the other variables.

Colonial Era through 1872: Gold and Silver “Bimetallism”

Because the original thirteen American colonies were part of the British Empire, their official money was naturally that of Great Britain—pounds, shillings, and pence—which at the time was officially on a silver standard. (Indeed, the very term “pound sterling” harkens back to a weight of silver.) Yet the colonists imported and used coins from around the world, while those in rural areas even used tobacco and other commodities as money.6

During the Revolutionary War, the Continental Congress issued unbacked paper money called Continental currency. The predictable price inflation gave rise to the expression “not worth a Continental.” (We will cover this episode in greater detail in chapter 9.)

Among the foreign coins circulating among the American colonists, the most popular was the Spanish silver dollar. This made the term “dollar” common in the colonies, explaining why the Continental currency was denominated in “dollars” and why the US federal government—newly established under the US Constitution—would choose “dollar” as the country’s official unit of currency.7

It is crucial for today’s readers to understand that from the inception of the modern (i.e., post-Constitution) United States in the late 1780s through the eve of the Civil War in 1861, the federal government issued currency only in the form of gold and silver coins. (The one borderline exception was the limited issues of Treasury Notes first used in the War of 1812. These were short-term debt instruments that earned interest and did not enjoy legal tender status, although the small denominations of the 1815 issues did serve as a form of paper quasi money among some Americans.8)

In this early period, banks were allowed to issue their own paper notes that were redeemable in hard money and, to the extent that they were trusted, might circulate in the community along with full-bodied coins, but these banknotes were not the same thing, economically or legally, as gold or silver dollars. In summary, for the first seventy-odd years after the modern federal government’s creation, official US dollars consisted of actual gold and silver coins that regular people carried in their pockets and spent at the store. Indeed, so bad was the constitutional framers’ experience with the Continental currency that they included in the Contract Clause the prohibition that “No State shall…make any Thing but gold and silver Coin a Tender in Payment of Debts.”

In the Coinage Act of 1792, the US dollar was defined as either 371.25 grains of pure silver or 24.75 grains of pure gold, which officially established a gold-silver ratio of exactly 15 to 1. Part of the rationale for this policy of “bimetallism”—in which new coins (of various denominations of dollars) could be minted from either of the precious metals—was that silver coins were convenient for small denominations (including fractions such as a half dollar, quarter dollar, dime, etc.), while gold coins were preferable for larger denominations (such as $10 and $20 pieces). By providing “dollars” consisting of both small-value silver coins and high-value gold coins, the idea was that bimetallism would allow Americans to conduct all of their transactions in full-bodied coins (without resort to paper notes or token coinage).

However, the problem with bimetallism is the phenomenon known as Gresham’s law, summarized in the aphorism “Bad money drives out good.” Specifically, when a government defines a currency in terms of both silver and gold, unless the implied value ratio of the two metals is close to the actual market exchange rate, one of the metals will necessarily be overvalued, while the other is undervalued. People then only spend the overvalued metal, while hoarding (or melting, or sending abroad) the undervalued metal.

In the case of the United States, when it established the 15-to-1 ratio in 1792, this was actually close to the actual market exchange rate between gold and silver. However, increased silver production led to a gradual erosion of the world price of silver, moving the actual market ratio closer to 15½ to 1. (This familiar ratio was partly held in place by France’s own bimetallic policy following the French Revolution, maintained by the French government’s large reserves of both metals.9)

As the world price of silver slipped relative to gold, the gap between market values and the US dollar’s definition eventually became so large that only silver was presented to the Mint for new coinage, while existing gold coins disappeared from commerce. As Rothbard reports: “From 1810 until 1834, only silver coin…circulated in the United States.”10 For a modern example of Gresham’s law in action, the reader can reflect that one would be a fool today to spend a pre-1965 quarter in commerce, since its silver content is worth far more than twenty-five cents.

The Coinage Acts of 1834 and 1837 revised the (implied11) content of the gold dollar down to 23.22 grains of pure gold, while leaving the silver dollar at 371.25 grains. Because there are 480 grains in a troy ounce, these definitions of the metallic content of the dollar implied a gold price of (approximately) $20.67 per ounce, and an unchanged silver price of (approximately) $1.29.
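The conversion from a dollar's metallic content to an implied price per ounce is a single division, sketched below in Python. The grain figures come from the text; the function name is ours.

```python
GRAINS_PER_TROY_OUNCE = 480


def mint_price_per_ounce(grains_per_dollar: float) -> float:
    """Dollars per troy ounce implied by defining one dollar as a weight in grains."""
    return GRAINS_PER_TROY_OUNCE / grains_per_dollar


gold_price = mint_price_per_ounce(23.22)     # gold dollar after 1834/1837
silver_price = mint_price_per_ounce(371.25)  # silver dollar, unchanged since 1792

print(f"Gold:   ${gold_price:.2f} per troy ounce")
print(f"Silver: ${silver_price:.2f} per troy ounce")
```

The results round to the $20.67 and $1.29 figures cited above.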

Thus the revised gold content of the dollar moved the gold/silver ratio to just under 16 to 1. This was now higher than the global price ratio of (roughly) 15½ to 1, meaning that the new definition favored gold and undervalued silver. Consequently, little silver was brought to the US Mint to be turned into new coinage—since the market value of the metal in a “silver dollar” coin was higher than $1—and the US, though still officially committed to a bimetallic standard, after 1834 flipped from a de facto silver standard to a de facto gold standard.
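Both episodes of Gresham's law described in this section follow from comparing the mint ratio with the market ratio. The Python sketch below uses the grain weights from the text and the rough world ratio of 15½ to 1; the function names and the simple two-way classification are our own simplification.

```python
def mint_ratio(silver_grains_per_dollar: float, gold_grains_per_dollar: float) -> float:
    """Ounces of silver the mint treats as equal to one ounce of gold."""
    return silver_grains_per_dollar / gold_grains_per_dollar


def metal_that_circulates(mint: float, market: float) -> str:
    """Return the metal the mint overvalues, which people bring in and spend."""
    if mint > market:
        return "gold"    # the mint credits gold with more silver than the market does
    if mint < market:
        return "silver"  # the mint credits gold with less, so gold is hoarded or exported
    return "both"


WORLD_RATIO = 15.5  # rough global market ratio cited in the text

ratio_1792 = mint_ratio(371.25, 24.75)  # Coinage Act of 1792
ratio_1834 = mint_ratio(371.25, 23.22)  # after the 1834/1837 revision

print(f"1792: mint ratio {ratio_1792:.2f}, circulating metal: "
      f"{metal_that_circulates(ratio_1792, WORLD_RATIO)}")
print(f"1834: mint ratio {ratio_1834:.2f}, circulating metal: "
      f"{metal_that_circulates(ratio_1834, WORLD_RATIO)}")
```

The sketch reproduces the flip described above: a de facto silver standard under the 1792 definition and a de facto gold standard after 1834.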

When the United States fell into Civil War in 1861, both sides resorted to the printing press and suspended specie payment. The North famously issued inconvertible paper notes called “greenbacks,” which led to large-scale price inflation. (We will cover this and other famous episodes of inflation in greater detail in chapter 9.)

US Participation in the Classical Gold Standard, 1873/1879–1914

The classical gold standard refers to the period beginning in the late nineteenth century when a growing number of countries tied their currencies to gold. Because the process was gradual, it is difficult to state precisely when the period began: “In 1873 there were some nine countries on the gold standard; in 1890, 22 countries; in 1900, 29 countries; and in 1912, 49 countries.”12

Recall from the previous section that going into the Civil War, the US dollar was defined in grains of the precious metals that implied a mint price of either $20.67 per troy ounce of gold, or of $1.29 per troy ounce of silver, for a gold-silver ratio of about 16 to 1. Because world prices of gold and silver were closer to 15½ to 1, there was little incentive to bring silver to the US Mint for conversion into coins.

Consequently, there was little opposition in 1873 when Congress discontinued the “free coinage” of the standard silver dollar (free coinage of fractional dollar silver coins having ended in 1853),13 as there had been little demand for the option. However, later in the decade, when world silver prices dropped—partly as a result of silver discoveries and partly as a result of other countries demonetizing silver, particularly the German Empire—the change of policy would be viewed in a different light. Indeed, pro-silver interests eventually referred to the momentous event as “the Crime of ’73.”14

The 1873 policy change, along with the growing limitations on the legal tender status of existing silver coins completed by 1874, officially ended the era of bimetallism in the United States:15 silver had been demonetized, rendering America a gold standard country. However, because the US remained in the “greenback” period left over from the Civil War, it actually was on neither metallic standard at the time, as it had suspended specie payment. Consequently, it can be argued that the US was not truly a participant in the classical gold standard until 1879, when the government resumed specie payment in gold (as required in the 1875 Specie Payment Resumption Act).

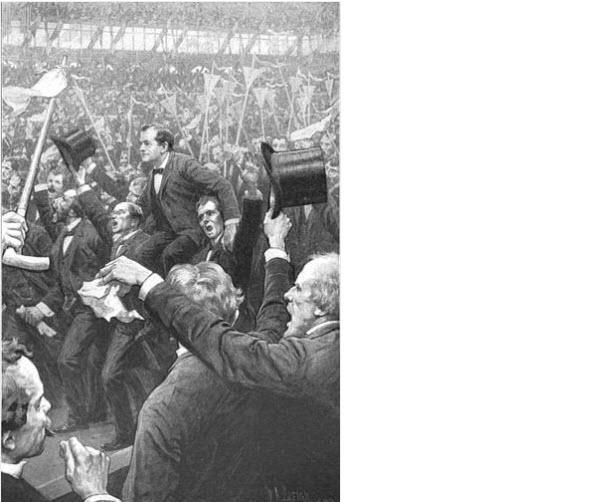

Artist’s conception of William Jennings Bryan after the Cross of Gold speech at the 1896 Democratic National Convention. McClure’s Magazine, April 1900

There was much drama in the battle between silver and gold interests, most notably William Jennings Bryan’s famous “Cross of Gold” speech—which called for a return to bimetallism and the free coinage of silver as a method of helping indebted farmers at the expense of the Wall Street elites—delivered at the 1896 Democratic National Convention, where he was nominated for president. Yet Bryan lost the general election to the pro-gold Republican William McKinley, who signed the Gold Standard Act of 1900 into law. This legislation codified the definition of the gold dollar that had been established back in 1837, which (we recall) implied a dollar/gold price of about $20.67 per ounce. This was the dollar’s gold content throughout the classical gold standard period, and would prevail until FDR’s devaluation in 1933/1934, described later in this chapter.

Although many modern economists scoff at the gold standard, in its “classical” heyday it was a quite remarkable achievement. Economic historian Carl Wiegand writes: “The decades preceding the First World War were characterized by a degree of economic and personal freedom rarely, if ever, experienced in the history of mankind.” He goes on to explain, “An essential part of this system was the gold standard.”

To give a flavor of this unrivalled degree of freedom before the Great War, consider this description from the famous economist Oskar Morgenstern:

[T]here was freedom of travel without passports, freedom of migration, and freedom from exchange control and other monetary restrictions. Citizenship was freely granted to immigrants…capital would move unsupervised in any direction….There were hardly any quantitative restrictions on international trade…[I]t was a world of which recently many…would have been inclined to assert that it could not be created because it could never work.

Alas, among the casualties of the world war would be the classical gold standard and its associated freedoms.

World War I and Its Aftermath

If the beginning of the classical gold standard is up for scholarly dispute, everyone agrees that it ended with World War I. Indeed, the Great War was only possible because the major governments abandoned their commitment to gold. As Melchior Palyi explains:

“This war cannot last longer than a few months” was a widely held conviction at the outset of World War I. All involved would go “bankrupt” shortly and be forced to come to terms, perhaps without a decision, on the battle fields. The belligerents would simply cease to be credit-worthy. Such was the frame of the European mind in 1914; the idea that credit and the printing press might be substituted for genuine savings was “unthinkable.” “Sound money” ruled supreme, supported by the logic of the free market. (bold added)18

Many commentators use war or other emergencies as examples of the problem with a strict gold standard—it allegedly ties the hands of government to respond in a crisis. However, that is an odd way of framing the matter. After all, printing unbacked fiat money doesn’t actually give the government access to extra tanks, bombers, and artillery; those all come from real resources, the availability of which is not directly affected by the quantity of paper money.

To say that World War I would have been “unaffordable” on the classical gold standard really just means that the citizens of the countries involved wouldn’t have tolerated the huge increases in explicit taxation and/or regular debt issue to pay for the conflict. Instead, to finance such unprecedented expenditures, their governments had to resort to the hidden tax of inflation, where the transfer of purchasing power from their peoples would be cloaked in rising prices that could be blamed on speculators, trade unions, profiteers, and other villains, rather than the government’s profligacy. This is why Ludwig von Mises said that inflationary finance of a war was “essentially antidemocratic.”

In light of these realities, when joining the war the major belligerents all broke from the gold standard, although the United States’s deviation consisted only in President Wilson embargoing the export of gold bullion and coin in 1917.20 After the war, the major powers made halfhearted attempts to restore some semblance of the international gold standard, but these were counterfeit versions (as we will detail below). The First World War thus dealt a mortal blow to the gold standard from which it never recovered.

We should take a moment in our discussion to explain the role of central banks, which also saw a major break with the start of the war. Although central banks were not necessary for the administration of the classical gold standard (the Federal Reserve didn’t even exist until late 1913), those countries that had central banks expected them to be independent from narrow political matters. Although the central banks exercised discretion in influencing interest rates and providing credit with the aim of smoothing out the business cycle (to use our modern terminology), they were ultimately bound by the “rules of the game” of the international gold standard and had to protect their gold reserves.

Once war broke out, however, not only was the link to gold severed, but the role of the central bank changed as well. The central banks of the belligerent powers all became subservient to the fiscal needs of their respective governments. As American economist Benjamin Anderson described the wartime operations of the British and American central banks (and note that in later chapters we will elaborate on the mechanisms Anderson mentions):

The [British] Government first borrowed from the Bank of England on Ways and Means Bills, and the Bank bought short term Treasury Bills also. This had the double purpose of giving the Government the cash it immediately needed, and of putting additional deposit balances with the Bank of England into the hands of the Joint Stock Banks….This increased the volume of reserve money for the banking community and made money easy, permitting an expansion of general bank credit which enabled the banks to buy Treasury Bills and Government bonds.…[T]he exigencies of war justified everything…

Speedily, too, the British financial authorities learned the process of regulating outside money markets in which they wished to borrow…especially, the New York money market. If an issue of bonds of the Allies…was to be placed in our [US] market, it was preceded by the export of a large volume of gold, accurately timed, to increase surplus reserves in the New York banks and to facilitate an expansion of credit in the United States which would make it easy for us to absorb the foreign loan. (bold added)

After the wartime experience, the “traditional gold standard had ceased to be ‘sacrosanct,’” in the words of Palyi. “Events proved, supposedly, that mankind could prosper without it.” After all, if the gold standard could be violated and central banks could use their discretionary powers to help with the war effort, why not do the same for other important social goals, like promoting economic growth and reducing inequality?

Because of the severe wartime price inflation, after the 1918 armistice the major powers desired a return to gold. However, resuming specie payment at the prewar parities would have proven very painful, since their currencies had been inflated so much during the war. The United States, for its part, ended the embargo on gold export in 1919, but in order to staunch the resulting outflow of gold and maintain the prewar dollar-gold ratio, the Federal Reserve was forced to raise interest rates and massively contract credit, resulting in the Depression of 1920–21.

At the 1922 Genoa Conference, a plan was hatched for a “gold exchange standard,” in which central banks around the world could hold financial claims on the Bank of England and Federal Reserve as their reserves, rather than physical gold. However, so long as the Bank of England and the Fed themselves stood ready to redeem sterling and dollar assets in gold, there was still some discipline imposed on the system.

Yet even here, the redemption policy was only effective for large amounts and hence only relevant for large institutions, as opposed to the universal policy under the classical gold standard. As Selgin explains:

A genuine gold standard must…provide for some actual gold coins if paper currency is to be readily converted into metal even by persons possessing relatively small quantities of the former. A genuine gold standard is therefore distinct from a gold “bullion” standard of the sort that several nations, including the United States, adopted between the World Wars. The Bank of England, for example, was then obliged to convert its notes into 400 fine ounce gold bars only, making the minimum conversion amount, in ca. 1929 units, £1,699, or $8,269. (bold added)

The interwar gold exchange standard sought to “economize” on gold holdings: rather than storing physical gold in central bank vaults around the world, only the United States and Great Britain needed to redeem their currencies in the yellow metal, while the rest of the world could stockpile paper claims against the financial titans. Yet this system was very fragile, relying on cooperation among the central banks so as not to threaten the smaller gold reserves that were doing much more “work” than they had under the classical standard.

As an example of the necessary coordination, consider 1925, when then chancellor of the Exchequer Winston Churchill sought to resume specie payments at the prewar parity and restore the pound to its traditional value of $4.86. To spare Britain a massive deflation in prices, the Federal Reserve (under the leadership of Benjamin Strong) agreed to an easy-credit policy, thus weakening the dollar in order to close some of the gap between the currencies. Economists of the Austrian school argue that the Fed’s loose policies of the 1920s helped fuel an unsustainable boom that led to the 1929 stock market crash.

The Great Depression and Bretton Woods

In the depths of the Great Depression, the newly inaugurated president Franklin D. Roosevelt euphemistically declared a “national bank holiday” on March 6, 1933, in response to a run on the gold reserves of the New York Fed. During the week-long closure, FDR ordered the banks to exchange their gold holdings for Federal Reserve notes, to cease fulfilling transactions in gold, and to provide lists of their customers who had withdrawn gold (or “gold certificates,” which were legal claims to gold for the bearer) since February of that year.

FDR would issue an even more draconian executive order on April 5, 1933, which required all citizens to turn in virtually all holdings of gold coin, bullion, and certificates in exchange for Federal Reserve notes, under penalty of a $10,000 fine and up to ten years in prison. Although US citizens couldn’t buy gold, foreigners still traded in the world market, and there the US dollar now fluctuated against the metal, the $20.67 anchor having been severed. The Roosevelt administration in 1934 officially devalued the currency some 41 percent by locking in a new definition of the dollar that implied a gold price of $35 per troy ounce. However, this redemption privilege was only offered to foreign central banks; American citizens were still barred from holding gold, and even from writing contracts using the international price of gold as a benchmark.
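The "some 41 percent" figure can be checked with a short calculation. The sketch below uses the two gold prices from the text; the function names are ours, and the second function is included only to show that the same change looks larger when expressed as a rise in the dollar price of gold.

```python
OLD_GOLD_PRICE = 20.67  # dollars per troy ounce before the devaluation
NEW_GOLD_PRICE = 35.00  # dollars per troy ounce under the 1934 definition


def devaluation_fraction(old_price: float, new_price: float) -> float:
    """Fractional cut in the weight of gold that one dollar represents."""
    return 1 - old_price / new_price


def gold_price_increase(old_price: float, new_price: float) -> float:
    """Fractional rise in the dollar price of an ounce of gold."""
    return new_price / old_price - 1


cut = devaluation_fraction(OLD_GOLD_PRICE, NEW_GOLD_PRICE)
rise = gold_price_increase(OLD_GOLD_PRICE, NEW_GOLD_PRICE)

print(f"Gold content of the dollar cut by {cut:.1%}")
print(f"Dollar price of gold raised by {rise:.1%}")
```

The two percentages describe the same event from opposite sides: the dollar lost roughly two-fifths of its gold content, which is equivalent to gold becoming roughly two-thirds more expensive in dollars.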

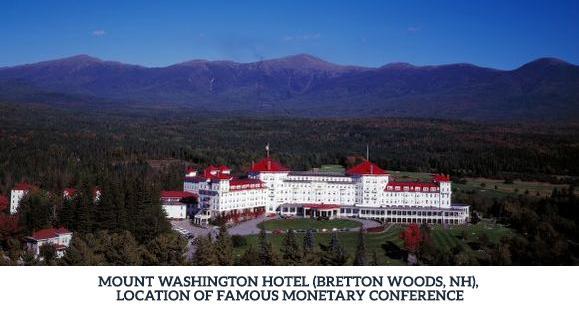

As the Allied victory in World War II became more certain, the Western powers hammered out the postwar monetary arrangements in the famous Bretton Woods Conference, a nineteen-day affair held at a New Hampshire hotel which led to the creation of the International Monetary Fund (IMF) and World Bank. Following the war, the global financial system would rest on a refined gold exchange standard in which the US dollar—rather than physical gold—displaced sterling and became the sole reserve asset held by central banks around the world.

Under the Bretton Woods system, other countries could still hold gold reserves, but they typically defined their currencies with respect to the US dollar and dealt with trade imbalances by accumulating dollar assets, rather than draining gold from countries with overvalued currencies. In theory the Federal Reserve kept the whole system tied to gold by pledging to redeem dollars presented by foreign central banks for gold at the new $35/ounce rate, but in practice even central banks were discouraged from invoking this option. Furthermore, governments only gradually lifted restrictions on international transactions following the war, so that the Bretton Woods gold exchange framework, tepid as it was, was really only fully operational by the late 1950s.

The Nixon Shock and Fiat Money

The US government relied on Federal Reserve monetary inflation to help finance the Vietnam War and the so-called War on Poverty. For a while other central banks were content to let their dollar reserves pile up, but French authorities eventually blinked in 1967, when they began to request the transfer of gold from New York and London to Paris. By 1968 the Americans had capitulated and let the unofficial market price of the dollar deviate from the official Bretton Woods value, relying on diplomatic pressure to dissuade other governments from exploiting the discrepancy and “running” on the Fed’s increasingly inadequate gold reserves.

Eventually the weight became too much to bear, and President Richard Nixon formally suspended the dollar’s convertibility on August 15, 1971. Along with other interventions in the economy (such as wage and price controls), this official closing of the gold window has been dubbed the “Nixon shock.”

Although Nixon assured the public that the gold suspension would be temporary, and that his policy would stabilize the dollar, neither promise would be fulfilled. From this point forward, the US—and hence the rest of the world—would operate on a purely fiat monetary system.